Redesigning Educational AI for Productive Struggle

Designing AI tools that encourage deeper comprehension and engagement among students.

Bypassing Productive Struggle

Current AI tools such as ChatGPT provide instant answers, potentially removing the beneficial struggle that is crucial for learning and for developing grit and problem-solving skills (“Productive Struggle and Math Rigor”).


Lack of Engagement and Overconfidence

Some interface designs for presenting AI explanations appear to produce little learning (Gajos and Mamykina): certain designs may facilitate some learning, while others show no learning at all. AI assistance is also risky because it can lead to overconfidence (Fisher and Oppenheimer).


Unclear Cognitive Engagement

While tools like Harvard's CS50 AI "Duck" were highly praised by students and used frequently, it is unclear how cognitively engaging the Duck was for students struggling through errors, or how it may or may not have contributed to their understanding of concepts (Liu et al., 2024).

Indicators in Focus

We chose resilience and knowledge transfer as indicators of productive struggle because they reflect the two core outcomes that this pedagogy aims to build.

- Resilience reflects persistence through difficulty, aligning with productive struggle’s emphasis on grit and effortful engagement (Sinha & Kapur, 2021).

- Knowledge transfer, the ability to apply understanding in new contexts, is a hallmark of deep learning and improves through productive failure (Kapur, 2008; Sinha & Kapur, 2021).

How might we design AI tools that foster resilience and knowledge transfer through productive struggle?

Qualitative Study Design

We conducted a qualitative study comparing two AI educational tools: a baseline that mimics ChatGPT's direct-answer approach, and an experimental prototype designed with pedagogical principles to encourage productive struggle. Both tools used OpenAI's API with the model referred to here as ChatGPT 4.0. The experimental tool used "system" messages to instruct the model to give guided, non-direct responses.
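A "system" message does not retrain the model. It is prepended to the conversation that is resent to the API on every turn. The sketch below shows how the two tools could assemble that conversation. It assumes that the baseline sends the learner's question with no system message. The two quoted phrases in the prompt come from the tool description later in this section. The rest of the prompt wording, and the helper name `build_messages`, are hypothetical. With the official `openai` Python SDK, the resulting list would be passed as `messages` to `client.chat.completions.create(model=..., messages=...)`. The exact model identifier used in the study is not stated.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical system prompt: only the phrases "a tutor helping students learning
# about computer science" and "guide students to answer their own question" come
# from the study's description; the remaining wording is an illustrative assumption.
EXPERIMENTAL_SYSTEM_PROMPT = (
    "You are a tutor helping students learning about computer science. "
    "Guide students to answer their own question. "
    "Do not give the final answer right away; offer an analogous example "
    "followed by guiding questions, in a positive, encouraging tone."
)


def build_messages(tool: str, history: List[Message], question: str) -> List[Message]:
    """Assemble the chat `messages` list for one turn (hypothetical helper).

    tool: "baseline" (assumed: no system message) or "experimental"
          (system message prepended to steer the model's behavior).
    history: earlier user/assistant turns, resent so the model keeps context.
    """
    if tool not in ("baseline", "experimental"):
        raise ValueError(f"unknown tool: {tool!r}")
    messages: List[Message] = []
    if tool == "experimental":
        messages.append({"role": "system", "content": EXPERIMENTAL_SYSTEM_PROMPT})
    messages.extend(history)  # prior conversational turns, in order
    messages.append({"role": "user", "content": question})
    return messages


if __name__ == "__main__":
    for tool in ("baseline", "experimental"):
        msgs = build_messages(tool, [], "What does a forever loop do in Scratch?")
        print(tool, [m["role"] for m in msgs])
```

Because the system message is resent on each request, the guided behavior persists across the "series of conversational prompts and responses" without any model training.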

Tool 1: Experimental Prototype


Not giving the answer right away

The tool will not give a student the solution to their question immediately. It will instead guide the learner through a series of conversational prompts and responses towards the proper answer.

Analogous examples

The tool responds to a learner's question with an analogous example, rather than answering the question directly in the context in which the learner posed it.

Guiding questions

After providing an analogous example, the tool prompts the learner with guiding questions to encourage them to take the given example and try to transfer that knowledge to answer their own question. These are presented under a "Guiding Questions" heading.
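In the prototype, the model itself generates this response structure from its instructions. The hypothetical `format_tutor_reply` function below only makes the described layout concrete: an analogous example comes first, followed by prompts under a "Guiding Questions" heading, and no final answer is given. The numbering of the questions is an assumption.

```python
from typing import List


def format_tutor_reply(analogous_example: str, guiding_questions: List[str]) -> str:
    """Lay out a reply as the prototype's responses are described (hypothetical):
    an analogous example, then numbered prompts under "Guiding Questions".
    The learner's original question is deliberately left unanswered."""
    if not guiding_questions:
        raise ValueError("at least one guiding question is expected")
    lines = [analogous_example.strip(), "", "Guiding Questions"]
    for i, question in enumerate(guiding_questions, start=1):
        lines.append(f"{i}. {question.strip()}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(format_tutor_reply(
        "Think of a chef who tastes the soup and adds salt only if it is bland.",
        [
            "What condition is the chef checking before acting?",
            "Which Scratch block lets a sprite act only when a condition is true?",
        ],
    ))
```

The questions ask the learner to map the analogy back onto their own problem, which is the transfer step the design targets.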

Positive and encouraging tone

The AI is instructed to be "a tutor helping students learning about computer science" and to "guide students to answer their own question". In one example, when a learner asks for the answer, the AI responds, "I'd be happy to help you work through the problem to find the answer!" This indicates an encouraging, helpful tone.

Tool 2: Baseline (ChatGPT 4.0)

_Page_11_Image_0001_edited.jpg)

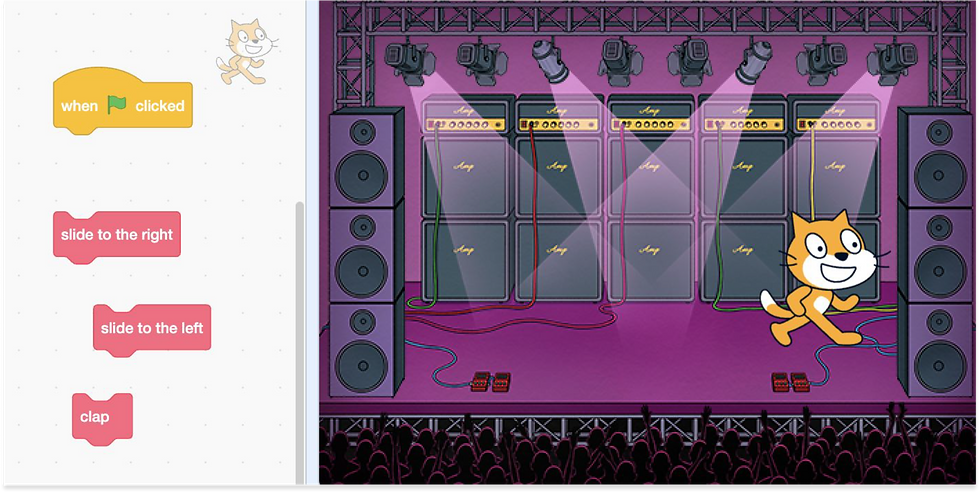

Participants, Activities, and Interviews

We recruited 8 Harvard students who self-reported "limited or no prior computer science background". They completed two activities on the Scratch platform designed to assess conceptual computer science skills, specifically loops and if-else statements. Interviews lasting approximately 45 to 60 minutes were conducted in person or via Zoom so that we could observe and analyze how participants used the tools.


Key Insight from the study: Varying Mindsets Towards AI

Participants' interaction with the tools, and their satisfaction, depended on how they perceived AI. These perceptions fell into three categories:

- "AI as a basic tool" (distrustful): Exhibited "infrequent usage with reluctance".

- "AI as a collaborative partner" (symbiotic): Showed "frequent usage of the AI tool", often for clarifying concepts or getting starting points. Some participants also expressed "feelings of guilt associated with using the tool".

- "AI as an expert consultant" (answer key): Displayed "infrequent, last resort usage", with interactions focused on "extract[ing] an answer and information".